ECS to EKS: Why Our Team Made The Switch

Justin Anderson, Lead Software Engineer

Published December 1

This year, Kepler's Technology and Data Science (TDS) team transitioned our container orchestration from AWS's Elastic Container Service (ECS) to their Elastic Kubernetes Service (EKS). This post will briefly review the factors that went into the decision to change the way we schedule and deploy containers.

First, some context:

The TDS team at Kepler builds and maintains a platform of mostly-internal marketing tools called the Kepler Intelligence Platform (KIP). This includes around 30 APIs and frontends, as well as several dozen scheduled data ingestion and cleaning processes. Given the simplicity of our infrastructure and the predictability of our traffic, we had long considered Kubernetes to be "overkill" relative to our needs. This year, however, several factors influenced our decision to make the switch.

Flexibility

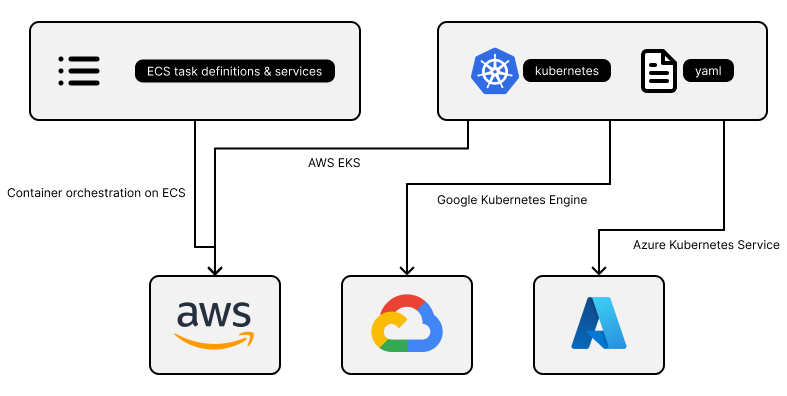

ECS deployments required AWS-specific configuration that was not portable to other cloud providers

By far the biggest factor in our decision was the flexibility of Kubernetes as compared to ECS. As we mature as a company, we anticipate the need to support multiple cloud providers. Each of our ECS deployments came with a significant amount of AWS-specific configuration in the form of ECS Task Definitions and ECS Services. The hard work we put into fine-tuning these configurations is not portable. If we ever wanted to run these workflows on GCP or Azure, we would have to start from scratch with each provider's own container service.

By contrast, Kubernetes is open source, and the major cloud providers all provide managed solutions to run Kubernetes on their platforms. Google Cloud provides Google Kubernetes Engine. Microsoft Azure provides Azure Kubernetes Service. The Kubernetes manifests we've written to deploy our apps on EKS would port nicely to either of these competing cloud providers.

Of course, moving to EKS doesn't immediately allow broader migrations, such as a complete provider switch. At the end of the day, EKS is still an AWS service, and we lean on many AWS-specific implementations. However, the move to EKS has greatly reduced the "intimidation factor" of supporting multiple cloud providers.

Community Support

As mentioned above, Kubernetes is open source and can be run on most cloud providers. This has resulted in a thriving community of users across a wide variety of use cases. Aside from the obvious help available in troubleshooting forums, the community has also generated third-party tools and add-ons that we've used in our transition from ECS to EKS. As ECS is a proprietary tool, it offers less in the way of community-generated custom tools.

For example, we run our Continuous Integration/Continuous Deployment (CI/CD) pipelines with GitHub Actions, using self-hosted runners to perform testing and deployment tasks. Running these on ECS required a non-trivial amount of customization, and resulted in a somewhat basic and inelegant solution. Fortunately, the Kubernetes community had developed a custom solution to this common problem, the Actions Runner Controller.

The Actions Runner Controller is a Kubernetes add-on that manages self-hosted runners for you and queries the GitHub API to determine if more runners are needed (autoscaling). Building this flexibility into our ECS deployment would have required a significant amount of custom work. With the help of the Kubernetes community, we were able to easily replicate what we had done in ECS, and improve on it.

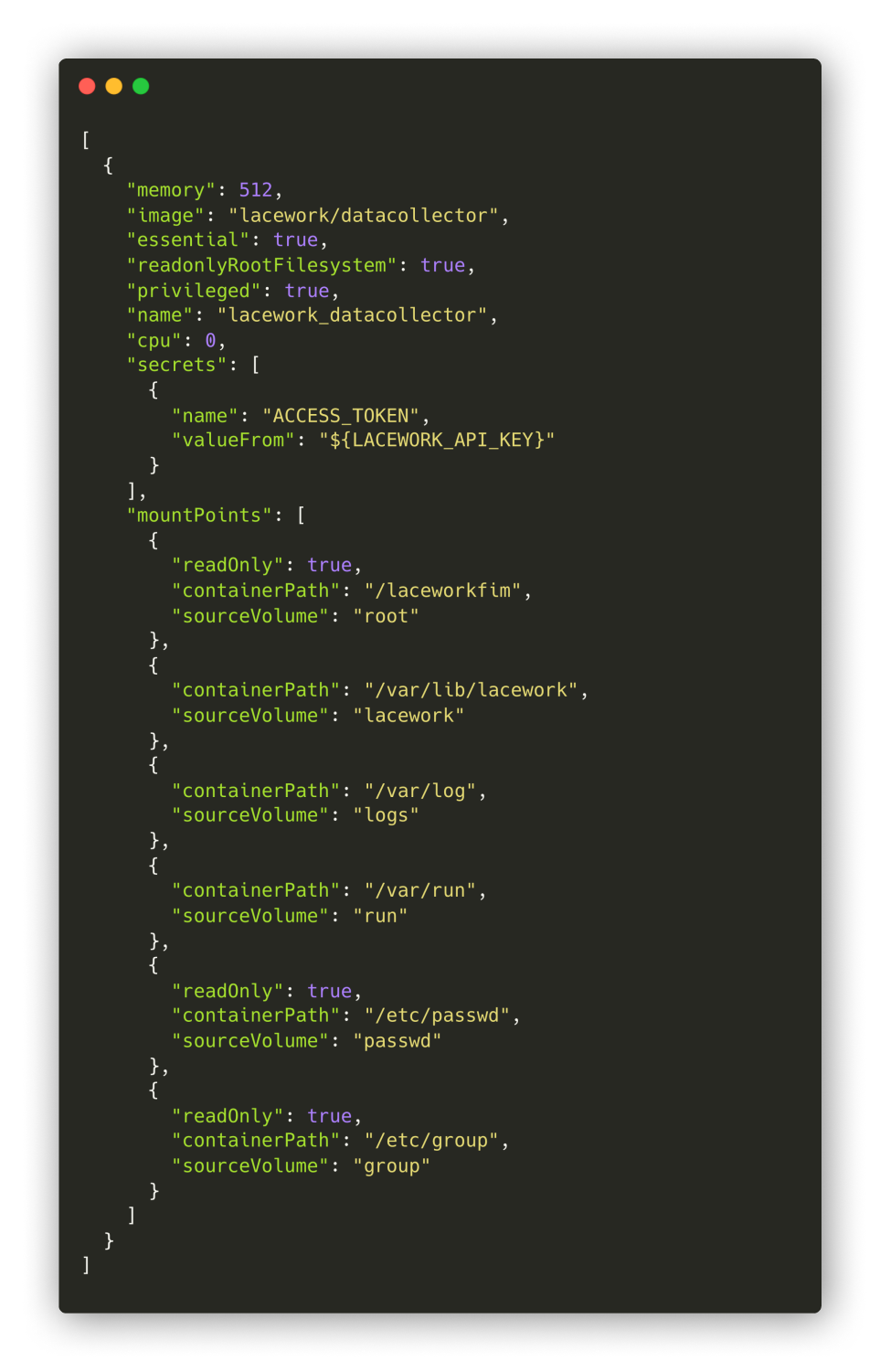
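To make the autoscaling idea concrete, here is a minimal Python sketch of the kind of decision a runner autoscaler has to make. It is an illustration only, not the Actions Runner Controller's actual algorithm: the function name, its parameters, and the default limits are all hypothetical, and in a real setup the job and runner counts would come from the GitHub API rather than being passed in by hand.

```python
def desired_runners(queued_jobs: int, busy_runners: int,
                    min_runners: int = 1, max_runners: int = 10) -> int:
    """Return how many runners should exist for the current demand.

    Hypothetical helper for illustration; not part of any real tool.
    """
    if queued_jobs < 0 or busy_runners < 0:
        raise ValueError("job and runner counts must be non-negative")
    if min_runners > max_runners:
        raise ValueError("min_runners must not exceed max_runners")

    # Demand is every job already running plus every job still waiting.
    demand = queued_jobs + busy_runners

    # Clamp to the configured floor and ceiling so we never scale to
    # zero unexpectedly or grow without bound.
    return max(min_runners, min(max_runners, demand))


if __name__ == "__main__":
    # Three jobs waiting and two runners busy: five runners are needed.
    print(desired_runners(queued_jobs=3, busy_runners=2))
```

On ECS, logic like this (plus the polling and the scaling calls around it) would have been ours to write and maintain, which is the custom work the add-on saves us.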

Another way in which we've benefited from Kubernetes community support is via Helm, the Kubernetes package manager. We run several vendor agents in our environment, such as Lacework, for cloud security monitoring. On ECS, we leveraged Lacework's custom Docker image to run a containerized agent, but this required customizing many container configuration options, and deep-diving into the documentation to do so.

An example of a Lacework task definition

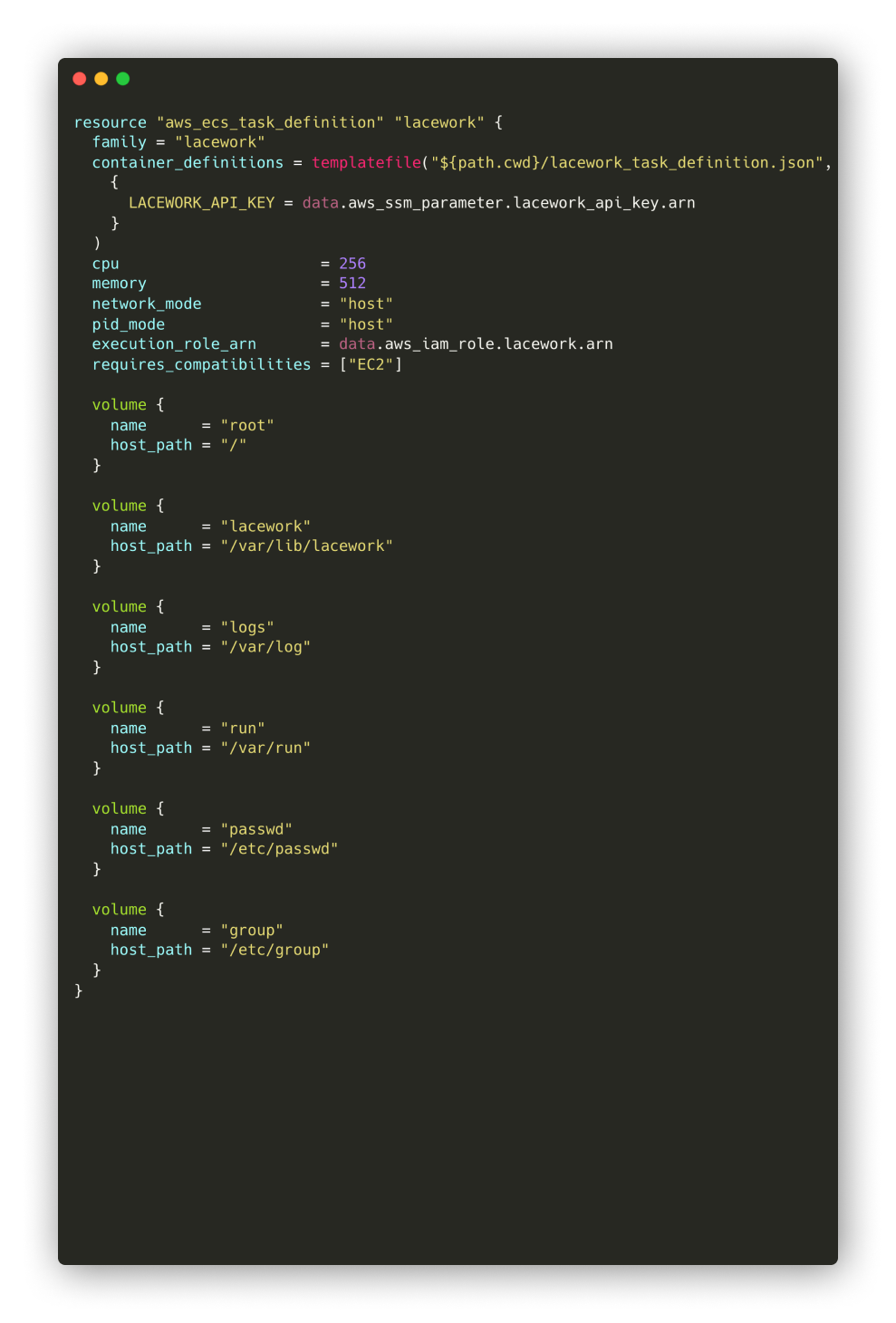

An example of a Lacework terraform file

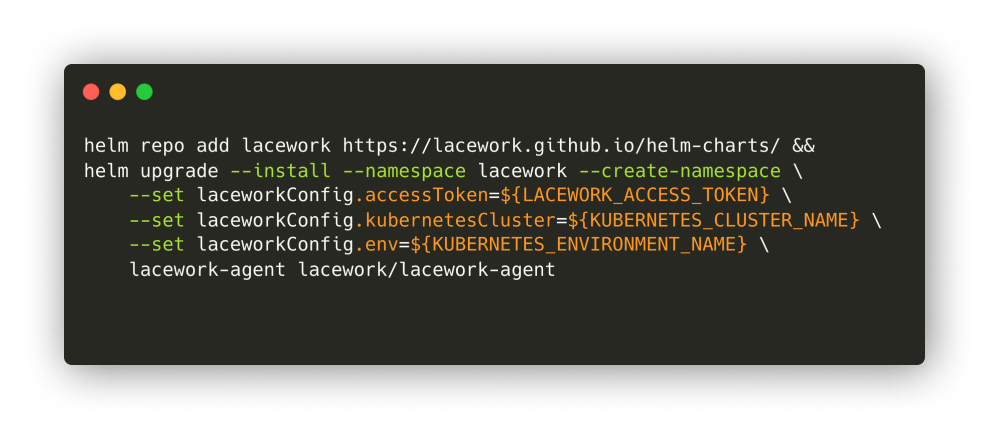

By contrast, Lacework supports deployment on Kubernetes via a Helm chart. As seen below, all that is required for us to deploy on EKS is two simple commands, and the Helm package manager deploys all the containers that we need, properly configured.

The commands used to deploy on EKS using Helm

The stronger community support behind Kubernetes was a major reason for our move from ECS to EKS, and so far it has paid off.

TBD - Increased Developer Participation in Deployment

A third motivating factor in our switch to EKS was to better include developers in the deployment of their apps. I've caveated this with "TBD" in the header, as we don't yet know exactly what this will look like. Still, I think it's useful to review our thinking in this regard.

When we ran our apps on ECS, deployment and container configuration were managed by our centralized DevOps team. One reason for this was that we deployed ECS Tasks and Services using Terraform. Terraform is an infrastructure-as-code (IaC) tool, and cloud configurations are described in HashiCorp Configuration Language (HCL). A certain threshold of HCL knowledge was required to deploy this ECS-specific infrastructure properly using that tool.

Additionally, we managed all Task and Service configuration centrally, in a repository controlled by the DevOps team. This was to take advantage of the resource-referencing and sharing abilities of Terraform. Almost by default, app deployment configuration became a DevOps task.

We are still in transition here, and our workflow with EKS currently matches that of ECS. However, our hope with Kubernetes is to place more control of deployment in developer hands. For one, Kubernetes deployments are configured in YAML manifests, a much more approachable format that should take less time to learn when working out how an app should be configured. This applies not only to the deployment details, but to load balancing as well (a topic to be discussed in more detail in a future blog post).
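To give a sense of how little a basic manifest contains, here is a small Python sketch that builds a minimal Kubernetes Deployment and prints it. This is an assumption-laden illustration rather than one of our real manifests: the app name, image, replica count, and port are placeholders, and the output is JSON (which Kubernetes accepts alongside YAML) only so the example needs nothing beyond the standard library.

```python
import json


def build_deployment(name: str, image: str,
                     replicas: int = 2, port: int = 8080) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a plain dict.

    Hypothetical helper for illustration; all values are placeholders.
    """
    # The selector must match the pod template's labels, so both are
    # derived from the same mapping.
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Placeholder name and image; not a real registry or service.
    manifest = build_deployment(
        "example-api", "registry.example.com/example-api:1.0.0"
    )
    print(json.dumps(manifest, indent=2))
```

The whole deployment is a handful of readable fields (what to run, how many copies, which port), which is the kind of configuration we hope developers will feel comfortable owning.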

Additionally, given that apps are now deployed by interacting with the Kubernetes API, rather than any shared Terraform state, there is less of a benefit to centrally managing these files. As a result, we hope to move application Kubernetes YAMLs into the application repos themselves. This will align deployment configuration with application code, and emphasize that understanding how our apps work also means understanding how they are deployed.

Now that we are fully up and running on Kubernetes, these associated process changes are the "next step" in taking advantage of the move to EKS.

Conclusion

When we decided to move from Elastic Container Service to Elastic Kubernetes Service early this year, it was in the hope that we'd benefit from 1) more flexibility, 2) a robust supporting community and 3) a deployment process that developers could participate in more fully. So far, we feel like this has paid off, and we are excited to continue down this path.

Stay tuned for more posts (and greater technical detail) about our transition from ECS to EKS!